Remember when we reported some market data indicating Meta had snapped up 150,000 of Nvidia’s beastly H100 AI chips? Now it seems Meta wants to have more than double that, at 350,000 of the big silicon things by the end of the year.

Most estimates put the price of an Nvidia H100 GPU at between $20,000 and $40,000. Meta and Microsoft are Nvidia’s two biggest customers for H100, so it’s safe to assume Meta will be paying less than most.

However, some basic arithmetic around Nvidia’s reported AI revenues and its unit shipments of H100 shows that Meta can’t be paying an awful lot less, if any, than the lower end of that price range, which works out to roughly $7 billion worth of Nvidia AI chips over two years. For sure, then, at the very least we’re talking about many billions of dollars being spent by Meta on Nvidia silicon.
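For anyone who wants to check the sums, here is a minimal sketch of that calculation. It assumes a flat per-unit price across all 350,000 chips, which is a simplification, and the function and constant names are ours, purely for illustration.

```python
# Figures from the article; the price band is an analyst estimate, not a list price.
TARGET_H100_COUNT = 350_000
PRICE_LOW_USD = 20_000
PRICE_HIGH_USD = 40_000


def fleet_cost_usd(units: int, unit_price_usd: int) -> int:
    """Total spend for `units` GPUs at a flat per-unit price (an assumption)."""
    return units * unit_price_usd


low = fleet_cost_usd(TARGET_H100_COUNT, PRICE_LOW_USD)
high = fleet_cost_usd(TARGET_H100_COUNT, PRICE_HIGH_USD)

print(f"Low-end estimate:  ${low / 1e9:.1f} billion")
print(f"High-end estimate: ${high / 1e9:.1f} billion")
```

The low end lands on the $7 billion mentioned above, and the top of the price range would double it.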

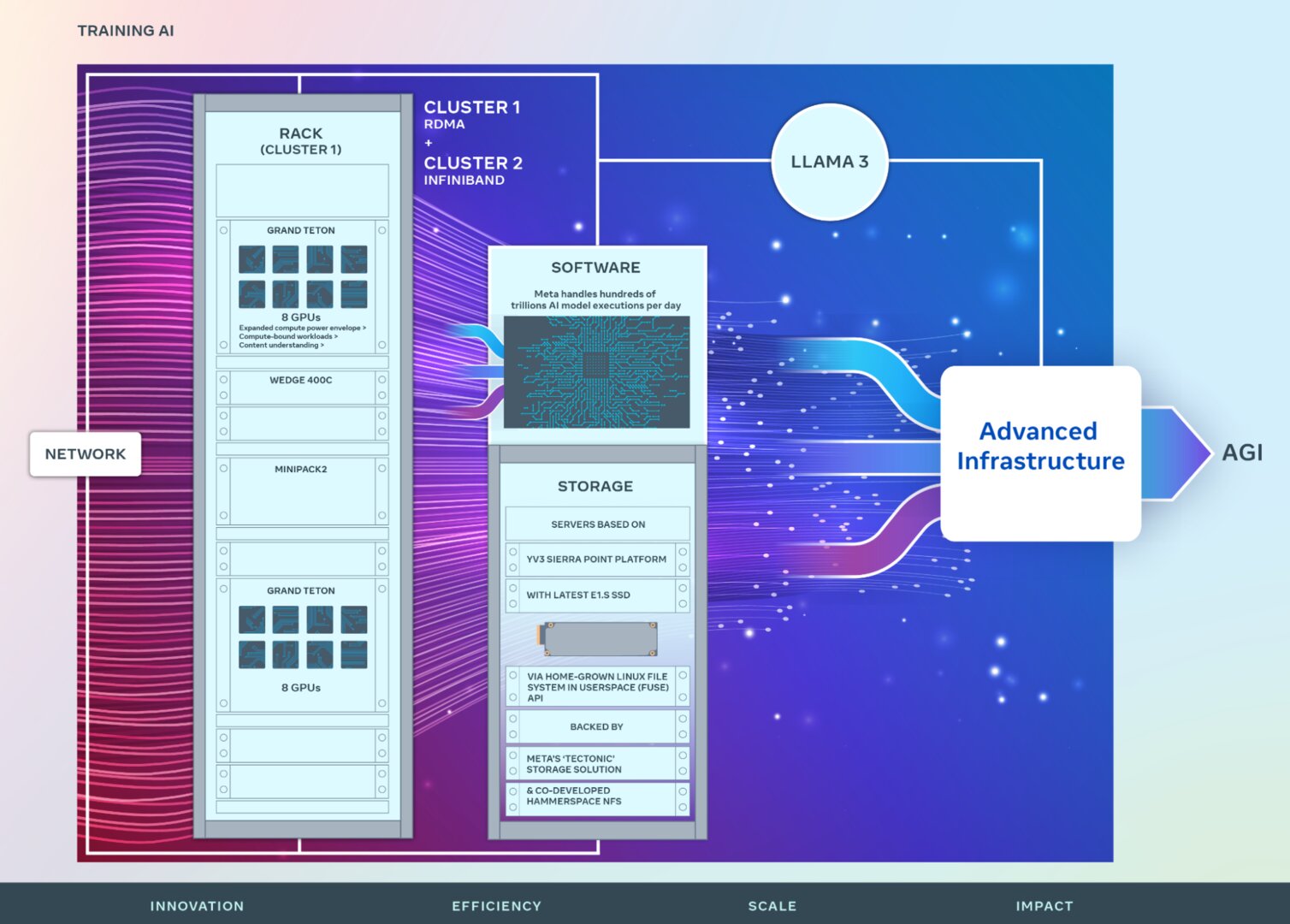

Meta has also now revealed some details (via ComputerBase) about how the H100s are implemented. Apparently, they’re built into 24,576-strong clusters used for training language models. At the lower end per-unit H100 price estimate, that works out at roughly $490 million worth of H100s per cluster. Meta has provided insight into two slightly different versions of these clusters.

To quote ComputerBase, one “relies on Remote Direct Memory Access (RDMA) over Converged Ethernet (RoCE) based on the Arista 7800, Wedge400 and Minipack2 OCP components, the other on Nvidia’s Quantum-2 InfiniBand solution. Both clusters communicate via 400 Gbps fast interfaces.”

In all candour, we couldn’t tell our Arista 7800 arse from our Quantum-2 InfiniBand elbow. So, all that doesn’t mean much to us beyond the realisation that there’s a lot more in hardware terms to knocking together some AI training hardware than just buying a bunch of GPUs. Hooking them up would appear to be a major technical challenge, too.
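The per-cluster GPU bill is easy enough to sanity-check, though. The sketch below counts only the H100s themselves, at the same assumed $20,000 to $40,000 price band, and ignores the networking kit, servers and everything else. The names are hypothetical.

```python
# Cluster size from the article; prices are the same analyst estimate range.
GPUS_PER_CLUSTER = 24_576
PRICE_LOW_USD = 20_000
PRICE_HIGH_USD = 40_000


def cluster_gpu_cost_usd(gpus: int, unit_price_usd: int) -> int:
    """GPU-only cost of one cluster; excludes networking, hosts and power."""
    return gpus * unit_price_usd


cluster_low = cluster_gpu_cost_usd(GPUS_PER_CLUSTER, PRICE_LOW_USD)
cluster_high = cluster_gpu_cost_usd(GPUS_PER_CLUSTER, PRICE_HIGH_USD)

print(f"Per cluster, low end:  ${cluster_low / 1e6:,.1f} million")
print(f"Per cluster, high end: ${cluster_high / 1e6:,.1f} million")
```

At the low-end price that comes out close to half a billion dollars of GPUs per cluster, before a single switch or cable is bought.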

But it’s the 350,000 figure that’s the real eye opener. Meta was estimated to have bought 150,000 H100s in 2023, and hitting that 350,000 target means buying fully 200,000 more of them this year.
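Put as a quick calculation, and assuming the 150,000 estimate for 2023 is accurate and that all of those chips count towards the 350,000 target, the gap looks like this (variable names are illustrative only):

```python
# Both figures come from the article; the 2023 number is a third-party estimate.
BOUGHT_2023 = 150_000
TARGET_TOTAL = 350_000

# Units still needed to reach the target.
still_needed = TARGET_TOTAL - BOUGHT_2023

# How this year's purchases compare with last year's, as a ratio.
ratio_vs_2023 = still_needed / BOUGHT_2023

print(f"Additional H100s needed: {still_needed:,}")
print(f"That is {ratio_vs_2023:.2f}x the estimated 2023 purchase")
```

In other words, this year's order would need to be about a third bigger than last year's already enormous one.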

According to the figures provided by research outfit Omdia late last year, Meta’s only rival for sheer volume of H100 acquisition was Microsoft. Even Google was only thought to have bought 50,000 units in 2023. So it would have to ramp up its purchases by a truly spectacular amount to even come close to what Meta is buying.

For what it’s worth, Omdia predicts that the overall market for these AI GPUs will be double the size in 2027 that it was in 2023. So, Meta buying a huge amount of H100s in 2023 and yet even more of them this year fits the available data well enough.

What this all means for the rest of us is anyone’s guess. It’s hard to predict anything, from the impact this massive demand for AI GPUs will have on the availability of humble graphics-rendering chips for PC gaming, to the details of how upcoming AI will advance over the next year or two.

As if these numbers weren’t big enough, Omdia reckons Nvidia’s revenues for these big data GPUs will actually be double in 2027 what they are today. They won’t all be used for large language models and similar AI applications, but a lot of them will.

It’s interesting that Omdia is so bullish about Nvidia’s prospects in this market despite the fact that many of Nvidia’s biggest customers are actually planning on building their own AI chips. Indeed, Google and Amazon already do, which is probably why they don’t buy as many Nvidia chips as Microsoft and Meta.

Strong competition is also expected to come from AMD’s MI300 GPU and its successors, and there are newer startups trying to get in on the action, including Tenstorrent, led by one of the most highly regarded chip architects in the world, Jim Keller.

One thing is for sure, however: all of these numbers do rather make the gaming graphics market look puny. We’ve created a monster, folks, and there’s no going back.
